TL;DR

Humans can easily decode unfamiliar numeral systems. Can LLMs? We find that they fail unless the operations are explicitly marked with symbols. Unlike humans, LLMs lack the ability to infer implicit compositional structure from patterns in numerals.

Abstract

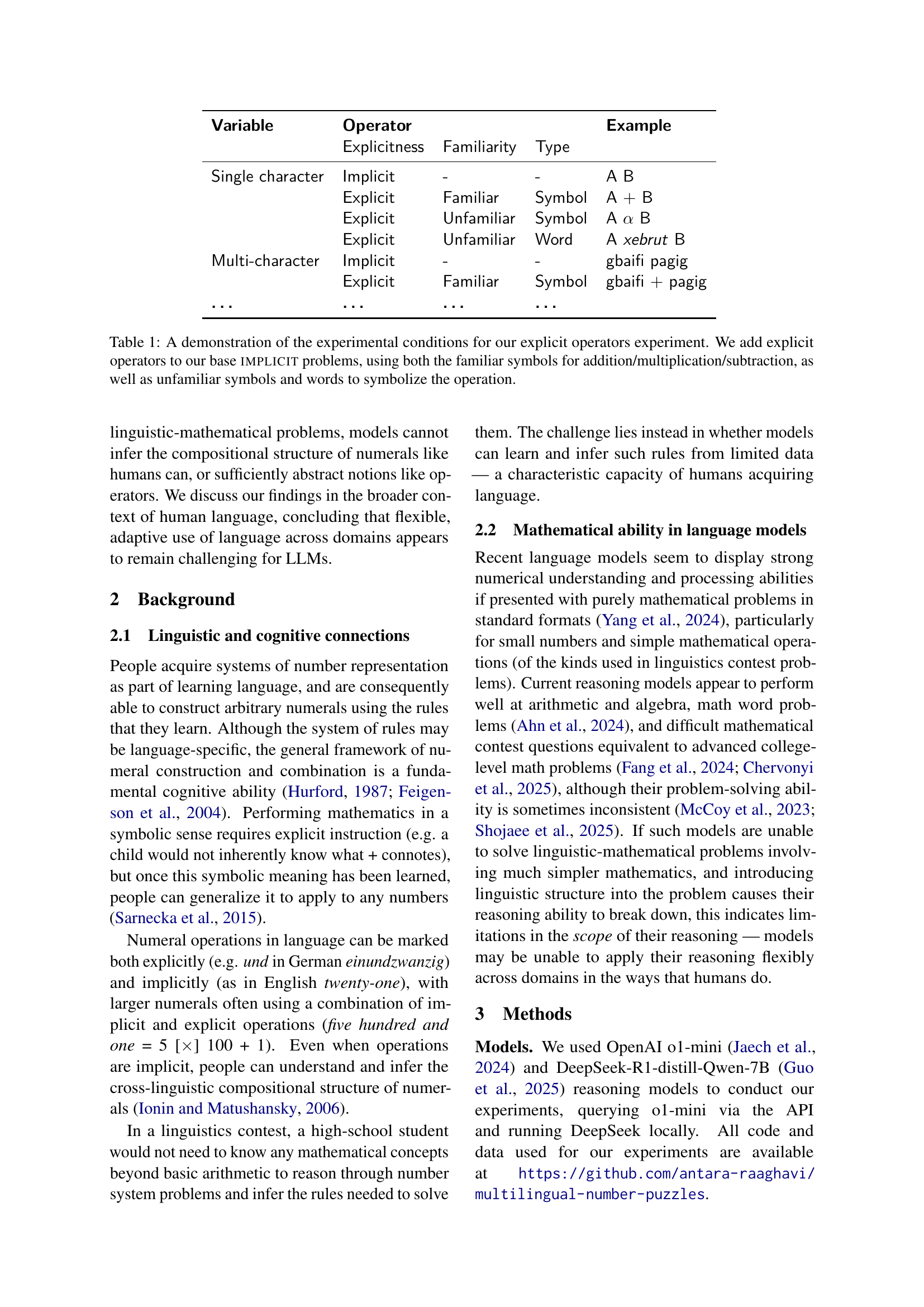

Across languages, numeral systems vary widely in how they construct and combine numbers. Humans consistently learn to navigate this diversity and can learn to solve linguistic-mathematical puzzles involving cross-linguistic numeral systems, but large language models (LLMs) struggle with such puzzles. We investigate why this task is difficult for LLMs through a series of experiments that disentangle the linguistic and mathematical aspects of numbers in language. Our experiments establish that models cannot consistently solve such problems unless the mathematical operations in the problems are explicitly marked using known symbols (+, ×, etc.).
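To make the contrast between implicit and explicitly marked operations concrete, here is a minimal Python sketch built on a hypothetical toy numeral system. English glosses stand in for numeral words, and both the lexicon and the composition rule (a digit word placed before a base word multiplies it; all other juxtaposed terms are added) are illustrative assumptions, not data or code from the paper. `to_explicit` encodes the rule a solver would have to infer; once its output marks the operations with `+` and `×`, `eval_explicit` computes the value mechanically.

```python
import math

# Hypothetical toy lexicon (an assumption for illustration, not from the paper's puzzles).
DIGITS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
          "six": 6, "seven": 7, "eight": 8, "nine": 9}
BASES = {"ten": 10, "hundred": 100}


def to_explicit(words):
    """Rewrite an implicit numeral as an expression with marked operations.

    Assumed rule: a digit word directly before a base word multiplies it;
    every other term is added to the running total.
    """
    terms = []
    pending = None  # digit waiting to see whether a base word follows it
    for word in words:
        if word in BASES:
            base = BASES[word]
            if pending is not None:
                terms.append(f"{pending} × {base}")  # digit + base: multiplication
                pending = None
            else:
                terms.append(str(base))  # bare base word: added as is
        else:
            if pending is not None:
                terms.append(str(pending))  # previous digit was not a multiplier
            pending = DIGITS[word]
    if pending is not None:
        terms.append(str(pending))
    return " + ".join(terms)


def eval_explicit(expr):
    """Evaluate a sum of products written with explicit ' + ' and ' × ' symbols."""
    return sum(math.prod(int(f) for f in term.split(" × "))
               for term in expr.split(" + "))


if __name__ == "__main__":
    numeral = ["three", "hundred", "two", "ten", "five"]
    explicit = to_explicit(numeral)
    print(explicit)                 # 3 × 100 + 2 × 10 + 5
    print(eval_explicit(explicit))  # 325
```

In this sketch the hard step is `to_explicit`: the arithmetic in `eval_explicit` is trivial once the operations are written down, which mirrors the distinction the experiments draw between implicit and symbol-marked operations.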

Citation

@inproceedings{bhattacharya2025investigating,
  title={Investigating the interaction of linguistic and mathematical reasoning in language models using multilingual number puzzles},
  author={Bhattacharya, Antara Raaghavi and Papadimitriou, Isabel and Davidson, Kathryn and Alvarez-Melis, David},
  booktitle={Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing},
  year={2025}
}