TL;DR

Fixed tokenizers hurt performance on new domains like proteins. S2T2 learns to translate between a domain-specific tokenizer and the original, enabling better compression and semantics without retraining the whole model—and translations transfer across model sizes.

Abstract

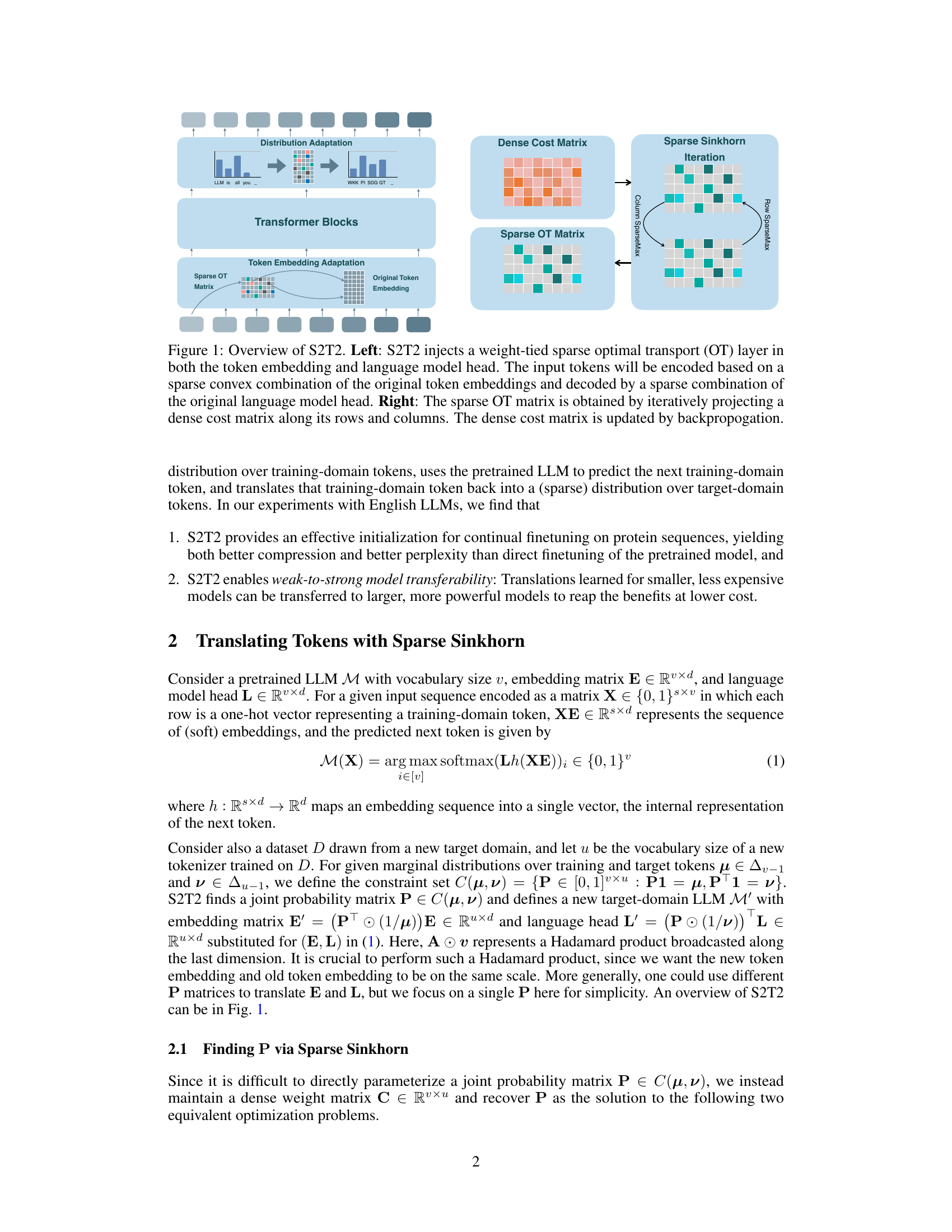

Modern large language models use a fixed tokenizer to effectively compress text drawn from a source domain. However, applying the same tokenizer to a new target domain often leads to inferior compression, more costly inference, and reduced semantic alignment. To address this deficiency, we introduce Sparse Sinkhorn Token Translation (S2T2). S2T2 trains a tailored tokenizer for the target domain and learns to translate between target and source tokens, enabling more effective reuse of the pre-trained next-source-token predictor. In our experiments with finetuned English language models, S2T2 improves both the perplexity and the compression of out-of-domain protein sequences, outperforming direct finetuning with either the source or target tokenizer. In addition, we find that token translations learned for smaller, less expensive models can be directly transferred to larger, more powerful models to reap the benefits of S2T2 at lower cost.

Citation

@article{feng2024s2t2,

title={Adapting Language Models via Token Translation},

author={Feng, Zhili and Marwah, Tanya and Fusi, Nicol{\`o} and Alvarez-Melis, David and Mackey, Lester},

journal={arXiv preprint arXiv:2411.00593},

year={2024}

}